Recent advances in speech emotion recognition (SER) have showcased the potential of deep learning in fields such as security, healthcare, and human-computer interaction. Models that combine convolutional neural networks with long short-term memory networks (CNN-LSTM) have become a common choice for the task. These models analyze speech to detect emotional nuances, which can improve user experience and understanding. However, the same sophistication that makes SER promising also leaves these models vulnerable to adversarial attacks: deliberately crafted inputs designed to trick the algorithms into making incorrect predictions.

A study by researchers at the University of Milan, published in the journal Intelligent Computing, sheds light on the nature and impact of these adversarial attacks on SER systems. The study distinguishes between two central types of attack: white-box and black-box. In white-box attacks, the attacker has full knowledge of the model's architecture and parameters, which makes it easier to design effective adversarial examples. In black-box attacks, by contrast, the attacker has little or no insight into the model's inner workings and must devise attacks based solely on its observable outputs. The study examined both attack types across several languages and across male and female speakers, and found that every attack examined significantly degraded the performance of the SER models.

The researchers used several methods to probe the vulnerabilities of SER systems, working with three datasets: EmoDB (German), EMOVO (Italian), and RAVDESS (English). The white-box attacks included the Fast Gradient Sign Method and the Basic Iterative Method, while the black-box attacks included the One-Pixel Attack and the Boundary Attack. Surprisingly, the black-box Boundary Attack produced results that rivaled those of the white-box methods, in some cases even outperforming them in efficiency and in how much it disrupted the models. This finding is particularly alarming because it suggests that an attacker could mount highly damaging attacks without in-depth knowledge of the model, raising concerns about the real-world implications of these vulnerabilities.
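To make the white-box/black-box distinction concrete, here is a minimal Python sketch, assuming a toy logistic-regression classifier over a short feature vector that stands in for a flattened log-Mel spectrogram. It is not the study's CNN-LSTM setup: the weights, `eps`, `alpha`, step counts, and the simplified Boundary Attack loop (random perturbation, projection back onto a sphere around the original input, then a small contraction toward it) are illustrative assumptions. FGSM and BIM read the loss gradient, which requires white-box access; `boundary_attack` only ever calls `predict_label`, the hard decision an outside attacker could observe.

```python
import math
import random


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w, b, x):
    """P(class 1) under a toy logistic-regression model (a stand-in, not a CNN-LSTM)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def predict_label(w, b, x):
    """Hard decision only: all a black-box attacker gets to observe."""
    return int(predict_proba(w, b, x) >= 0.5)


def sign(v):
    return (v > 0) - (v < 0)


def loss_grad_x(w, b, x, y):
    """White-box access: gradient of binary cross-entropy w.r.t. x, i.e. (p - y) * w."""
    p = predict_proba(w, b, x)
    return [(p - y) * wi for wi in w]


def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method: a single step of size eps along the gradient's sign."""
    g = loss_grad_x(w, b, x, y)
    return [xi + eps * sign(gi) for xi, gi in zip(x, g)]


def bim(w, b, x, y, eps, alpha, steps):
    """Basic Iterative Method: repeated FGSM steps of size alpha, clipped to x +/- eps."""
    x_adv = list(x)
    for _ in range(steps):
        g = loss_grad_x(w, b, x_adv, y)
        x_adv = [xa + alpha * sign(gi) for xa, gi in zip(x_adv, g)]
        x_adv = [min(max(xa, xo - eps), xo + eps) for xa, xo in zip(x_adv, x)]
    return x_adv


def boundary_attack(w, b, x, x_start, steps=200, delta=0.1, shrink=0.05, seed=0):
    """Simplified decision-based attack: uses predict_label only, never gradients.

    x_start must already be misclassified; each accepted step keeps it
    misclassified while moving it closer to the original input x.
    """
    rng = random.Random(seed)
    y_true = predict_label(w, b, x)
    x_adv = list(x_start)
    for _ in range(steps):
        dist = math.dist(x_adv, x)
        # Random perturbation proportional to the current distance.
        noise = [rng.gauss(0.0, 1.0) for _ in x]
        norm = math.hypot(*noise) or 1.0
        cand = [a + delta * dist * n / norm for a, n in zip(x_adv, noise)]
        # Project back onto the sphere of radius `dist` around x ...
        cdist = math.dist(cand, x) or 1.0
        cand = [o + (c - o) * dist / cdist for o, c in zip(x, cand)]
        # ... then contract slightly toward x.
        cand = [o + (1.0 - shrink) * (c - o) for o, c in zip(x, cand)]
        if predict_label(w, b, cand) != y_true:  # keep only still-adversarial points
            x_adv = cand
    return x_adv


if __name__ == "__main__":
    w, b = [1.0, -2.0, 0.5], 0.0    # hypothetical weights
    x, y = [0.2, -0.1, 0.4], 1      # clean input; the model predicts class 1
    print(predict_label(w, b, x))                                # 1
    print(predict_label(w, b, fgsm(w, b, x, y, eps=0.3)))        # 0
    print(predict_label(w, b, bim(w, b, x, y, 0.3, 0.1, 10)))    # 0
    start = [-1.0, 1.0, -1.0]       # any input the model already labels 0
    adv = boundary_attack(w, b, x, start)
    print(predict_label(w, b, adv), math.dist(adv, x) < math.dist(start, x))  # 0 True
```

The simplified boundary loop only ever shrinks the distance to the original input while the label stays wrong, which is the core idea of the decision-based approach; the published Boundary Attack adds adaptive step sizes and other refinements not shown here.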

Delving deeper, the research adopted a gender-based perspective, examining how adversarial attacks affected male versus female speech in each of the three languages. English proved the most susceptible language to these attacks, while Italian was the most resilient. Male speech samples showed slightly lower accuracy and greater susceptibility to perturbations, particularly under white-box attacks. The researchers noted that the differences between male and female samples were not large enough to support definitive conclusions, but the comparison still offers useful insight into how SER systems may behave differently depending on speaker characteristics.

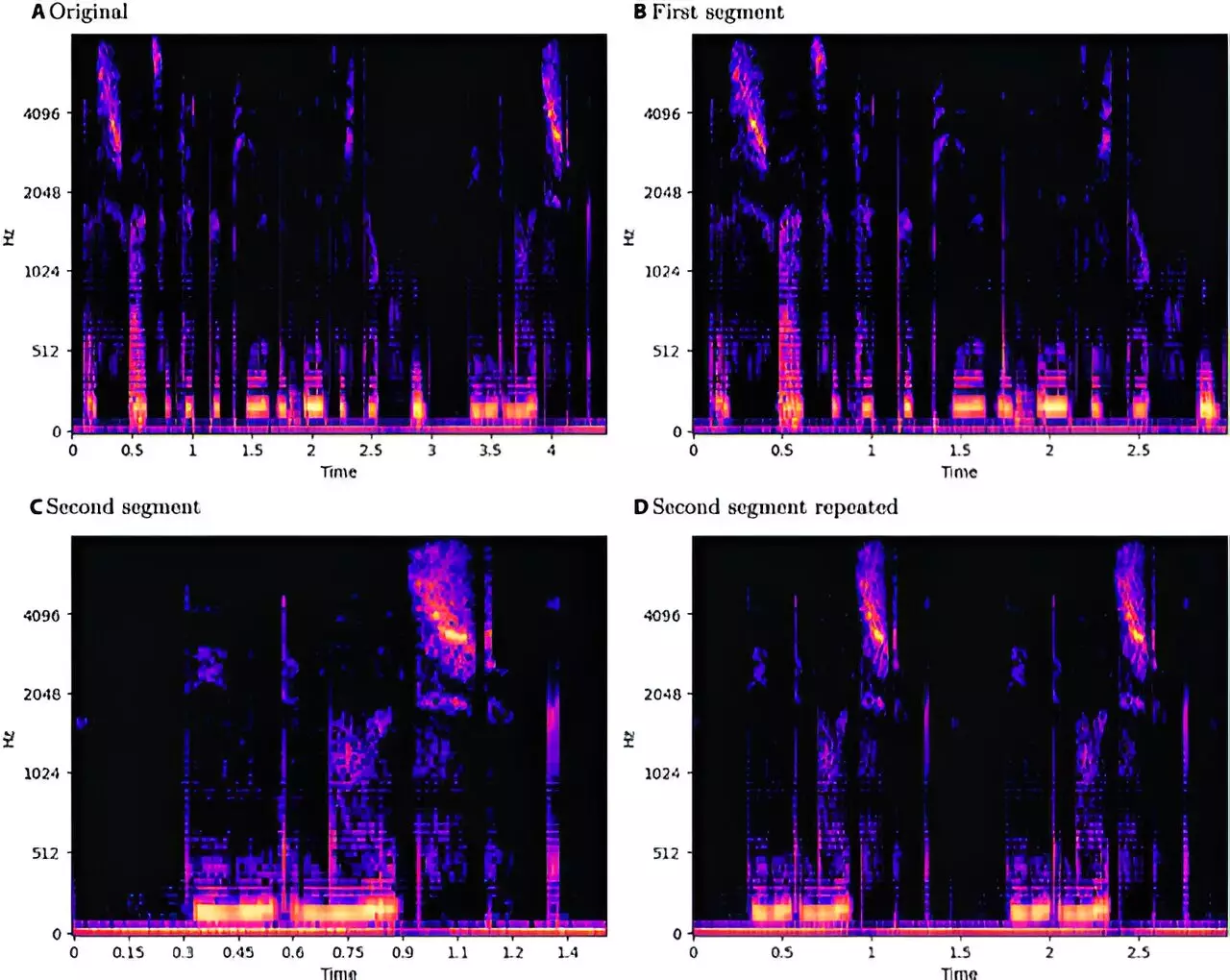

To ensure consistency and reliability, the researchers built a pipeline that standardized data processing across the different language datasets, using log-Mel spectrograms for feature extraction. Augmentation techniques such as pitch shifting and time stretching were used to enlarge the datasets, and the maximum sample duration was capped at three seconds. This methodological care was intended to ensure that the analysis reflected the vulnerabilities actually present in SER systems, giving a firmer basis for discussing how to mitigate these risks.
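Two small pieces of such a pipeline, the duration cap and the mel frequency scale that underlies log-Mel features, can be sketched in plain Python. This is an illustration under assumptions, not the authors' code: the 16 kHz sampling rate, zero-padding of clips shorter than three seconds, and the HTK-style mel formula are choices made here; only the three-second cap comes from the study.

```python
import math

SAMPLE_RATE = 16_000   # assumption: the article does not state the sampling rate
MAX_SECONDS = 3.0      # from the study: samples capped at three seconds


def fix_duration(samples, sr=SAMPLE_RATE, max_seconds=MAX_SECONDS):
    """Truncate to max_seconds; zero-pad shorter clips (the padding is an assumption)."""
    target = int(sr * max_seconds)
    clipped = list(samples[:target])
    return clipped + [0.0] * (target - len(clipped))


def hz_to_mel(f):
    """HTK-style mel scale: roughly linear below 1 kHz, logarithmic above."""
    return 2595.0 * math.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_center_frequencies(n_mels, f_min=0.0, f_max=SAMPLE_RATE / 2):
    """Center frequencies (Hz) of n_mels filters spaced evenly on the mel scale."""
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (n_mels + 1)
    return [mel_to_hz(lo + step * (i + 1)) for i in range(n_mels)]


if __name__ == "__main__":
    clip = [0.1] * 20_000                     # a hypothetical 1.25 s clip at 16 kHz
    print(len(fix_duration(clip)))            # 48000 samples = 3 s
    print(mel_center_frequencies(8))          # filter centers bunch up at low frequencies
```

The uneven spacing of the filter centers is why log-Mel spectrograms give more resolution to the low frequencies where much of the pitch and formant information in speech lies. In practice the spectrogram itself (short-time Fourier transform, mel filterbank, logarithm) and augmentations such as pitch shifting and time stretching are usually delegated to an audio library; librosa, for example, provides `librosa.feature.melspectrogram`, `librosa.power_to_db`, `librosa.effects.pitch_shift`, and `librosa.effects.time_stretch`.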

The implications of this study extend far beyond the research community. Exposing the vulnerabilities of SER models raises important questions about how to secure these systems against adversarial threats. While some may argue that revealing weaknesses could help malicious actors, the authors contend that withholding this information may be more harmful. Transparency fosters a better understanding of these risks, enabling defenders and analysts alike to develop robust strategies against potential exploits.

The research conducted at the University of Milan marks an important moment in the ongoing conversation about the security of speech emotion recognition systems. While these systems hold immense promise, the persistent threat of adversarial attacks demands immediate and sustained attention. Acknowledging and addressing these vulnerabilities is a step toward a more secure technological landscape, one in which the full potential of SER can be harnessed while it is safeguarded from exploitation. The road ahead will require collaboration among researchers, practitioners, and policymakers to fortify systems that are becoming integral to our digital interactions.
