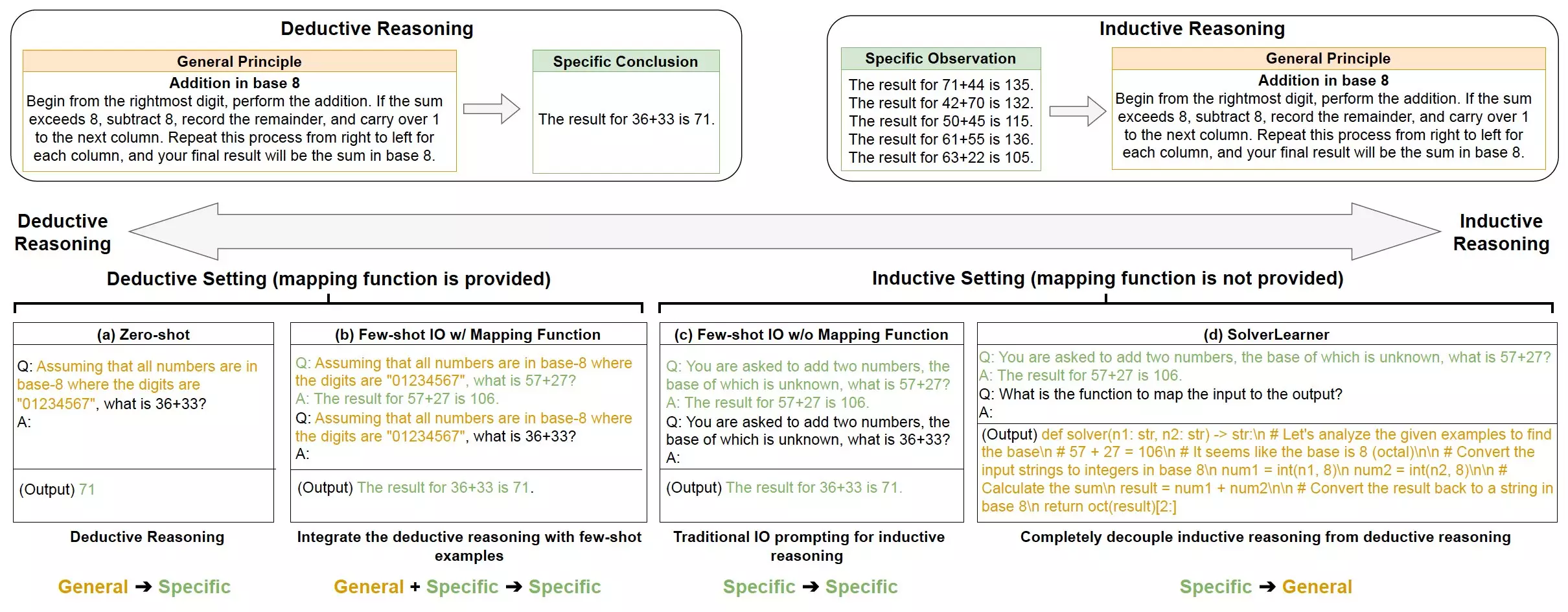

Reasoning is a complex cognitive process that involves the ability to process information and draw conclusions or solve problems. There are two main categories of reasoning that human beings use: deductive reasoning and inductive reasoning. Deductive reasoning starts from a general rule or premise and then uses that rule to draw conclusions about specific cases. On the other hand, inductive reasoning involves generalizing based on specific observations.
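The distinction can be made concrete with a toy sketch. The code below is only an illustration, not anything taken from the study: the function names `deduce` and `induce` are hypothetical, and the assumption that every rule has the form `y = a*x + b` with small integer coefficients is made purely to keep the search simple.

```python
from typing import Callable, List, Optional, Tuple


def deduce(rule: Callable[[int], int], case: int) -> int:
    """Deduction: a general rule is given; apply it to one specific case."""
    return rule(case)


def induce(observations: List[Tuple[int, int]]) -> Optional[Callable[[int], int]]:
    """Induction: search for a general rule that explains specific observations.

    Assumption for this sketch: candidate rules are y = a*x + b with
    integer a and b between -10 and 10.
    """
    for a in range(-10, 11):
        for b in range(-10, 11):
            if all(a * x + b == y for x, y in observations):
                # Bind a and b as defaults so the returned rule keeps these values.
                return lambda x, a=a, b=b: a * x + b
    return None  # no candidate rule fits the observations


# Deduction: the rule "double the input" is known in advance.
doubled = deduce(lambda x: 2 * x, 21)

# Induction: the rule is unknown and must be generalized from examples.
learned_rule = induce([(1, 5), (2, 7), (3, 9)])
prediction = learned_rule(10)
```

Here `deduce` never has to discover anything, while `induce` has to find a rule that fits every observation before it can say anything about a new case.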

While past studies have focused on how humans use deductive and inductive reasoning in their everyday lives, there has been limited exploration of how artificial intelligence (AI) systems employ these reasoning strategies. A recent study by a research team at Amazon and the University of California, Los Angeles examined the reasoning abilities of large language models (LLMs), which are AI systems that can process, generate, and adapt texts in human languages.

The study found that LLMs exhibit strong inductive reasoning capabilities but often struggle with deductive reasoning. Specifically, they perform well on inductive tasks, such as making general predictions based on specific examples. Their deductive reasoning is weaker, especially on tasks that deviate from the norm or rest on hypothetical assumptions.

To separate inductive from deductive reasoning in LLMs, the researchers introduced a framework called SolverLearner. It takes a two-step approach that keeps learning a rule apart from applying that rule to specific cases. The LLM is responsible for learning the rule, while external tools such as code interpreters apply it, so the evaluation avoids relying on the LLM’s deductive reasoning capability.
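The two-step idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the researchers' implementation: `propose_rule` is a hypothetical stand-in for an LLM call and returns a hard-coded answer so the sketch runs offline, and the base-8 addition task is chosen here only as an example of a rule that departs from everyday convention.

```python
from typing import Callable, Dict, List, Tuple

# Each example pairs two input strings with the expected output string.
Example = Tuple[Tuple[str, str], str]


def propose_rule(examples: List[Example]) -> str:
    """Step 1 (inductive): hypothetical stand-in for the LLM.

    A real system would prompt a model with the examples and ask it to
    write a Python function. The source is hard-coded here (assumption).
    """
    return (
        "def rule(a, b):\n"
        "    # Add two base-8 numerals and return a base-8 numeral.\n"
        "    return format(int(a, 8) + int(b, 8), 'o')\n"
    )


def compile_rule(source: str) -> Callable[[str, str], str]:
    """Step 2 (application): let the Python interpreter, not the LLM,
    turn the proposed source code into a callable."""
    namespace: Dict[str, object] = {}
    exec(source, namespace)  # only safe for trusted or sandboxed code
    return namespace["rule"]


def accuracy(rule: Callable[[str, str], str], examples: List[Example]) -> float:
    """Fraction of examples on which the executed rule gives the expected output."""
    hits = sum(rule(*inputs) == expected for inputs, expected in examples)
    return hits / len(examples)


# Observations shown to the (stubbed) model, written in base 8.
train = [(("7", "1"), "10"), (("15", "3"), "20")]
# Unseen cases, answered by executing the learned rule.
held_out = [(("77", "1"), "100"), (("36", "45"), "103")]

rule = compile_rule(propose_rule(train))
train_accuracy = accuracy(rule, train)
held_out_accuracy = accuracy(rule, held_out)
```

Because the interpreter executes the rule, any error on the held-out cases can be attributed to the rule that was induced rather than to a slip while applying it.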

The findings of the study have important implications for the development of AI systems. Understanding the strengths and weaknesses of LLMs in terms of reasoning can help developers leverage their strong inductive capabilities for specific tasks. For example, when designing chatbots or other agent systems, it may be more beneficial to focus on utilizing the inductive reasoning abilities of LLMs.

Future research in this area could explore how the ability of an LLM to compress information relates to its inductive reasoning capabilities. By further investigating the reasoning processes of LLMs, researchers may be able to improve their overall performance and enhance their utility in various applications.

Overall, the study underscores the value of knowing where LLM reasoning is strong and where it is weak, so that developers can match these models to the contexts in which they perform well.
