In recent years, Large Language Models (LLMs) such as OpenAI’s ChatGPT have surged in both capability and presence, permeating various aspects of daily life. These advanced artificial intelligence systems are designed to analyze and generate human-like text based on extensive datasets. While LLMs present exciting opportunities for enhancing communication and decision-making, they also pose distinct risks that could threaten the very fabric of human collaboration and collective intelligence. A significant recent study published in *Nature Human Behaviour*, led by researchers from Copenhagen Business School and the Max Planck Institute for Human Development, explores this duality, offering insights into how LLMs could be calibrated to serve as tools that elevate human collective efforts.

Collective intelligence refers to the capability that emerges when individuals share knowledge, skills, and experiences to solve problems more effectively than they could individually. This phenomenon can be seen across various platforms, from collaborative projects like Wikipedia to traditional corporate settings. Collective intelligence thrives on diversity; it brings together a multitude of viewpoints that contribute to richer and more innovative solutions. People have always relied on groups to make informed decisions or find solutions to complex problems, drawing on knowledge that individual expertise might not cover. As we continue to interact with LLMs, it is crucial to explore their implications for this widely recognized phenomenon.

The researchers underline several transformative benefits that LLMs can bring to collective intelligence. One of the most notable advantages is accessibility. By employing advanced translation services and sophisticated writing aids, LLMs can eliminate barriers that often hinder participation in group discussions. This is particularly beneficial for diverse teams where language or communication style variability might otherwise impede effective collaboration.

Moreover, LLMs have the potential to expedite ideation and facilitate consensus-building in various contexts. They can distill varying opinions, synthesize information, and highlight key areas of agreement, streamlining discussions that might otherwise stall due to disagreements or misunderstandings. Ralph Hertwig, one of the study’s co-authors, emphasizes the need to strike a balance between maximizing the benefits and guarding against the associated risks in a landscape increasingly influenced by these models.

Despite the positive features of LLMs, the study identifies several significant risks that users and stakeholders must consider. A pressing concern is the potential for reduced motivation to contribute to community knowledge repositories, like Wikipedia or Stack Overflow. An increasing reliance on LLMs could reduce individual contributions from users, altering the dynamics of knowledge sharing and collaboration.

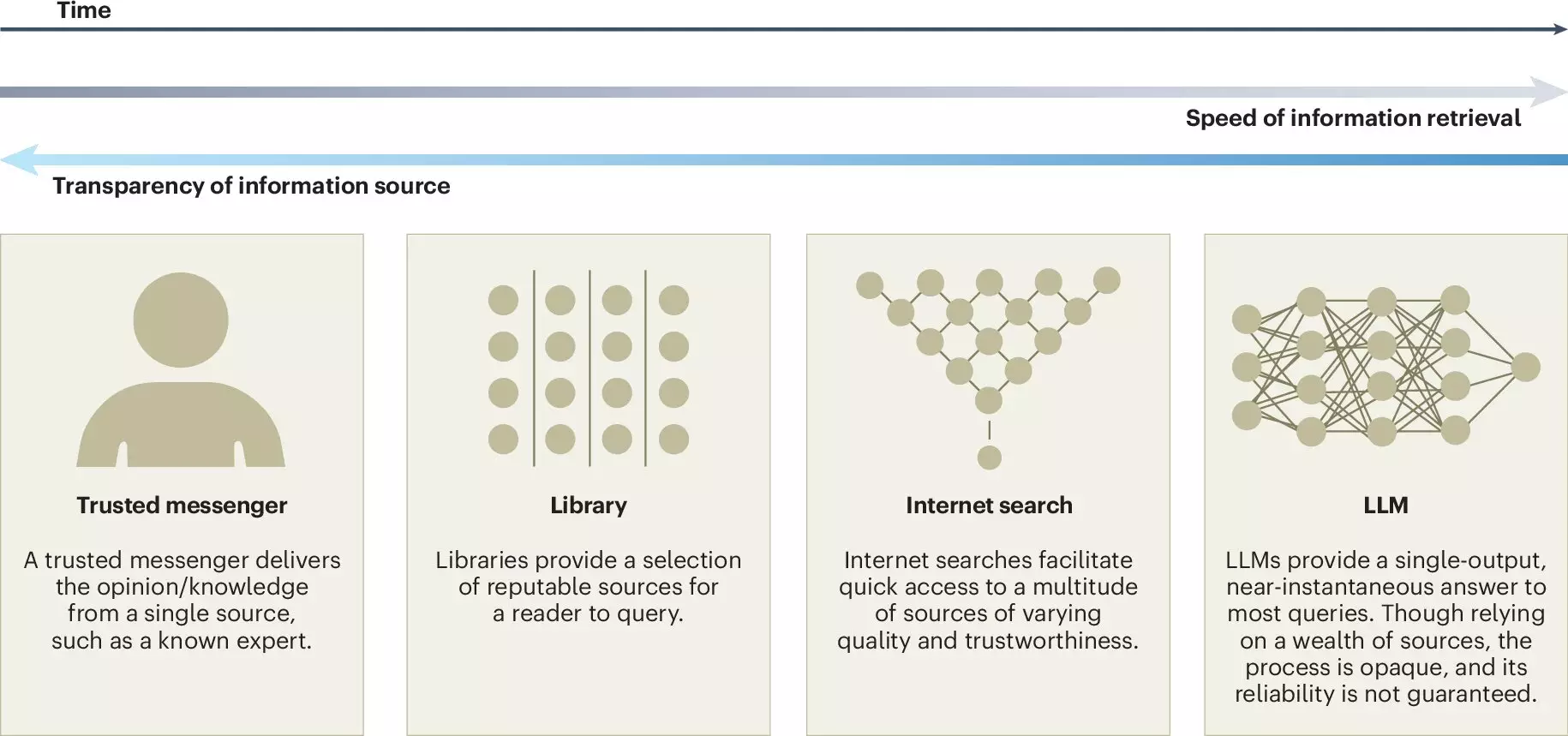

Additionally, the potential for a false sense of consensus is particularly alarming. LLMs aggregate information from existing online content, which may inadvertently amplify majority viewpoints while silencing minority opinions. This risk can lead to a situation known as pluralistic ignorance, where a community collectively misinterprets its members’ attitudes and beliefs. As Jason Burton, the lead author, notes, reliance on LLM-generated content may foster a misleading perception of agreement, skewing the diversity of discourse.

To navigate this complex landscape, the researchers advocate for transparency in the development of LLMs. Establishing clear guidelines on data sources and the methodologies used in training these models is imperative. Such measures can help ensure that LLMs are constructed in a way that reflects the current social landscape, rather than homogenizing knowledge. Furthermore, they suggest implementing external audits to monitor and assess the effects of these models on collective intelligence.

The study also poses several essential questions for future research, including how to preserve the integrity of diverse viewpoints within collective knowledge frameworks and how accountability should be enforced when LLMs assist in co-created outcomes. This kind of reflective inquiry is vital as it will guide developers and researchers in crafting LLMs that augment rather than undermine human collaboration.

As we continue to explore the functionalities of LLMs, it is evident that careful consideration and proactive measures are essential to harness their potential while safeguarding the principles of collective intelligence. Striking a delicate balance between leveraging technological advancements and preserving the invaluable aspects of human collaboration will determine whether these models act as partners in problem-solving or obstacles to authentic engagement. The journey ahead requires vigilance, creativity, and a commitment to fostering an inclusive environment where every voice is heard.
