Quantum computing research continues to probe what quantum systems can do that classical machines cannot. One recent result comes from a collaboration between the University of Chicago’s Department of Computer Science, the Pritzker School of Molecular Engineering, and Argonne National Laboratory: a classical algorithm that simulates Gaussian boson sampling (GBS) experiments. The work raises important questions about where the boundary between classical and quantum computing actually lies.

Gaussian boson sampling has emerged as a strong contender in the race to demonstrate quantum advantage, the ability of a quantum computer to perform computations that are infeasible for classical machines. Although theory indicates that quantum computers should outperform classical ones on certain tasks, practical implementations face many challenges, notably noise and photon loss during experiments. These complications raise questions about the accuracy of GBS outputs and call into question earlier claims of quantum advantage.

Assistant Professor Bill Fefferman, a key contributor to this research, highlights the crux of the issue: understanding how noise affects the performance of quantum experiments. While many researchers have shown promising results with quantum devices producing outputs that align with GBS predictions, they have also noted that noise often muddles these results, obscuring the true quantum advantage.

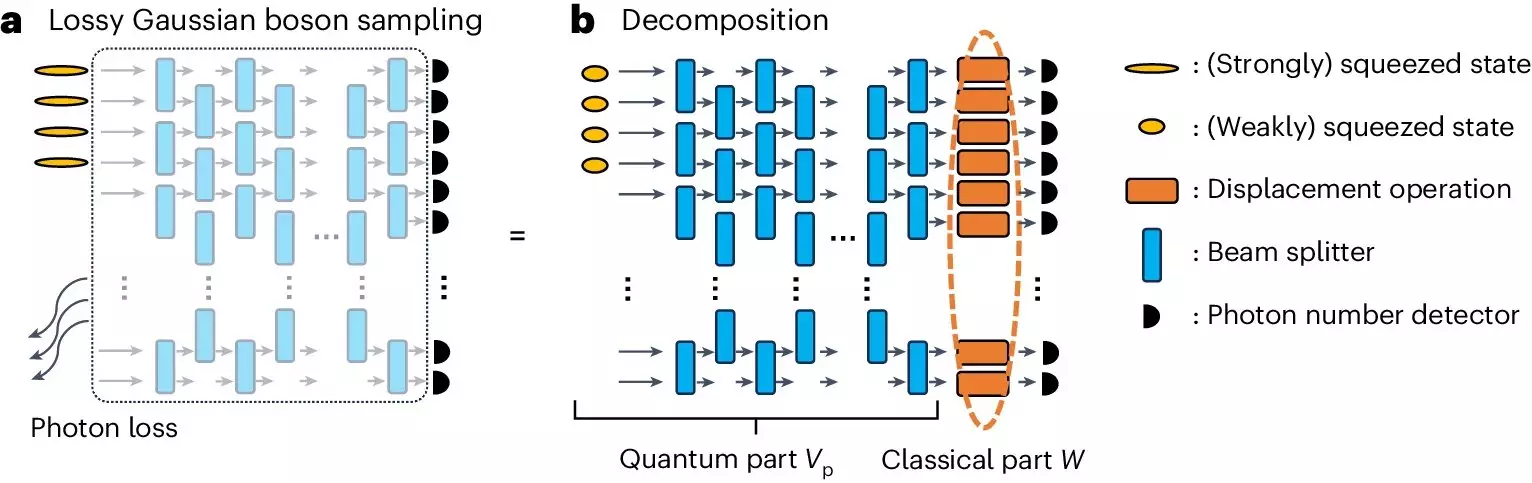

At the heart of the recent breakthrough lies a classical tensor-network algorithm designed to tackle the complexities introduced by noise in GBS experiments. By strategically capitalizing on high photon loss rates typical in contemporary GBS experiments, this new simulation demonstrates remarkable efficiency and accuracy, offering a fresh approach to understanding the underlying mechanisms of quantum information processing.
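To give a feel for why high photon loss helps a classical simulation, here is a minimal sketch of how loss degrades a single squeezed-light mode, the basic input resource in GBS. It is not the researchers' tensor-network algorithm. It uses the standard textbook loss-channel model for Gaussian states, assumes the convention that the vacuum quadrature variance is 0.5, and the function names are hypothetical ones chosen for illustration.

```python
import math

VACUUM_VARIANCE = 0.5  # assumed convention: vacuum quadrature variance is 1/2


def lossy_squeezed_variances(r, eta):
    """Quadrature variances of a squeezed vacuum after a loss channel.

    r   : squeezing parameter (r = 0 is plain vacuum)
    eta : transmission, i.e. the fraction of photons that survive (0..1)

    Loss mixes the state with vacuum: V -> eta * V + (1 - eta) * V_vacuum.
    Returns (squeezed_variance, anti_squeezed_variance).
    """
    if not 0.0 <= eta <= 1.0:
        raise ValueError("eta must lie in [0, 1]")
    squeezed = eta * VACUUM_VARIANCE * math.exp(-2 * r) + (1 - eta) * VACUUM_VARIANCE
    anti_squeezed = eta * VACUUM_VARIANCE * math.exp(2 * r) + (1 - eta) * VACUUM_VARIANCE
    return squeezed, anti_squeezed


def mean_photon_number(r, eta):
    """Mean photon number of the same state: eta * sinh(r)^2."""
    return eta * math.sinh(r) ** 2


if __name__ == "__main__":
    # Illustrative values only; they are not taken from any experiment.
    for eta in (1.0, 0.7, 0.3):
        squeezed, _ = lossy_squeezed_variances(1.0, eta)
        print(f"eta={eta:.1f}  squeezed variance={squeezed:.3f}  "
              f"mean photons={mean_photon_number(1.0, eta):.3f}")
```

As the transmission drops, the squeezed variance climbs back toward the vacuum value of 0.5, so the state carries less of the non-classical character that makes sampling hard. This is the general intuition behind exploiting loss, under the assumptions stated above.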

Interestingly, the researchers found that their classical simulation often outperformed several state-of-the-art GBS experiments across a range of benchmarks. This finding suggests that the limitations of current quantum experiments do not reflect an inherent failure of quantum computing. Rather, they point to an opportunity to refine our understanding and optimize future quantum systems.

Fefferman emphasizes that this is not merely a critique of quantum technology; rather, it opens avenues for improvement and promises to strengthen quantum algorithms, enhancing the utility of quantum computing.

The ramifications of more effective GBS simulations extend well beyond quantum computing itself, touching fields such as cryptography, materials science, and pharmaceuticals. Quantum technologies hold significant promise for secure communication, materials discovery, and drug development. As quantum computing matures, industries may be able to rethink how they operate and address complex challenges more efficiently.

Moreover, as quantum capabilities grow, organizations may be able to optimize supply chain operations, improve the efficiency of artificial intelligence frameworks, and enhance climate modeling. Collaboration between classical and quantum computing is crucial to realizing these advances, since it draws on the strengths of both models.

Collaboration and Continued Research

Fefferman’s work, which involves collaboration with noted figures like Professor Liang Jiang and former postdoc Changhun Oh, epitomizes the collective effort driving this innovative research forward. Their multi-phase inquiries have been central in revealing the intricacies of noisy intermediate-scale quantum (NISQ) technology, with notable work on lossy boson sampling and the impact of noise on quantum supremacy demonstrations.

Through a series of studies, they have examined the often-overlooked role of photon loss and its influence on how efficiently classical simulations can compete. Their recent contributions also explore the challenges associated with GBS and propose new architectures that improve programmability and resistance to photon loss, making large-scale experiments more feasible.

Furthermore, the researchers have developed classical algorithms inspired by quantum methods to tackle graph-theoretical problems and problems in molecular chemistry. Collectively, these findings challenge some existing claims of quantum advantage, suggesting that the gap between quantum and classical methods on these tasks may be narrower than previously believed.

As the quantum landscape continues to evolve, the development of classical algorithms that can effectively simulate GBS marks a monumental stride toward bridging the divide between the two computing paradigms. This research not only bolsters our comprehension of Gaussian boson sampling but also underscores the need for ongoing exploration and collaboration in both quantum and classical realms.

Better simulation sharpens our understanding of what quantum devices actually achieve, which in turn guides the development of more capable quantum technologies across a variety of sectors. As researchers delve deeper into this quantum-classical interplay, the prospects for innovation grow, pointing toward a future in which quantum computing delivers on its promise.
