Artificial intelligence (AI) has made significant strides in recent years, driven primarily by advances in machine learning and by the vast amounts of data now available for processing. Despite these advances, AI models such as ChatGPT run on predefined algorithms and conventional hardware, and they are fundamentally constrained by how quickly that hardware can process their data. A critical challenge in this respect is the von Neumann bottleneck, a limitation of conventional computing architecture that restricts how efficiently data can be handled. Researchers, including a team led by Professor Sun Zhong of Peking University's School of Integrated Circuits and Institute for Artificial Intelligence, have turned their attention to this problem.

The von Neumann bottleneck arises because conventional architectures separate memory from processing units, so data must be shuttled back and forth between them. Growing dataset sizes exacerbate the problem, because the rate at which data can be moved often lags behind the rate at which it can be processed. The resulting imbalance constrains AI models, which depend heavily on data-intensive operations, above all matrix-vector multiplication (MVM), the core operation of neural networks.
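To make the bottleneck concrete, here is a minimal Python sketch of the MVM behind one neural-network layer, paired with a deliberately simplified von Neumann cost model. The cost model is an assumption for illustration, not one from the study: every weight is fetched from memory once, the input vector is loaded once, and each output is written back once. The function name `mvm_with_traffic` is hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def mvm_with_traffic(W: Matrix, x: Vector) -> Tuple[Vector, int, int]:
    """Compute y = W @ x and count operations under a simplified
    von Neumann cost model (assumption: no caching or weight reuse)."""
    rows, cols = len(W), len(x)
    words_moved = cols  # load the input vector once
    macs = 0
    y: Vector = []
    for i in range(rows):
        if len(W[i]) != cols:
            raise ValueError("matrix row length must match vector length")
        acc = 0.0
        for j in range(cols):
            words_moved += 1  # fetch weight W[i][j] from memory
            acc += W[i][j] * x[j]
            macs += 1  # one multiply-accumulate
        y.append(acc)
        words_moved += 1  # write output y[i] back to memory
    return y, macs, words_moved


if __name__ == "__main__":
    y, macs, moved = mvm_with_traffic([[1, 2, 3], [4, 5, 6]], [1, 0, -1])
    print(y, macs, moved)  # [-2.0, -2.0] 6 11
```

Because no weight is reused within a single MVM, the number of words moved grows in step with the number of multiply-accumulates. For the 2×3 example the sketch reports 6 multiply-accumulates against 11 words moved, which is why MVM performance is so sensitive to memory bandwidth.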

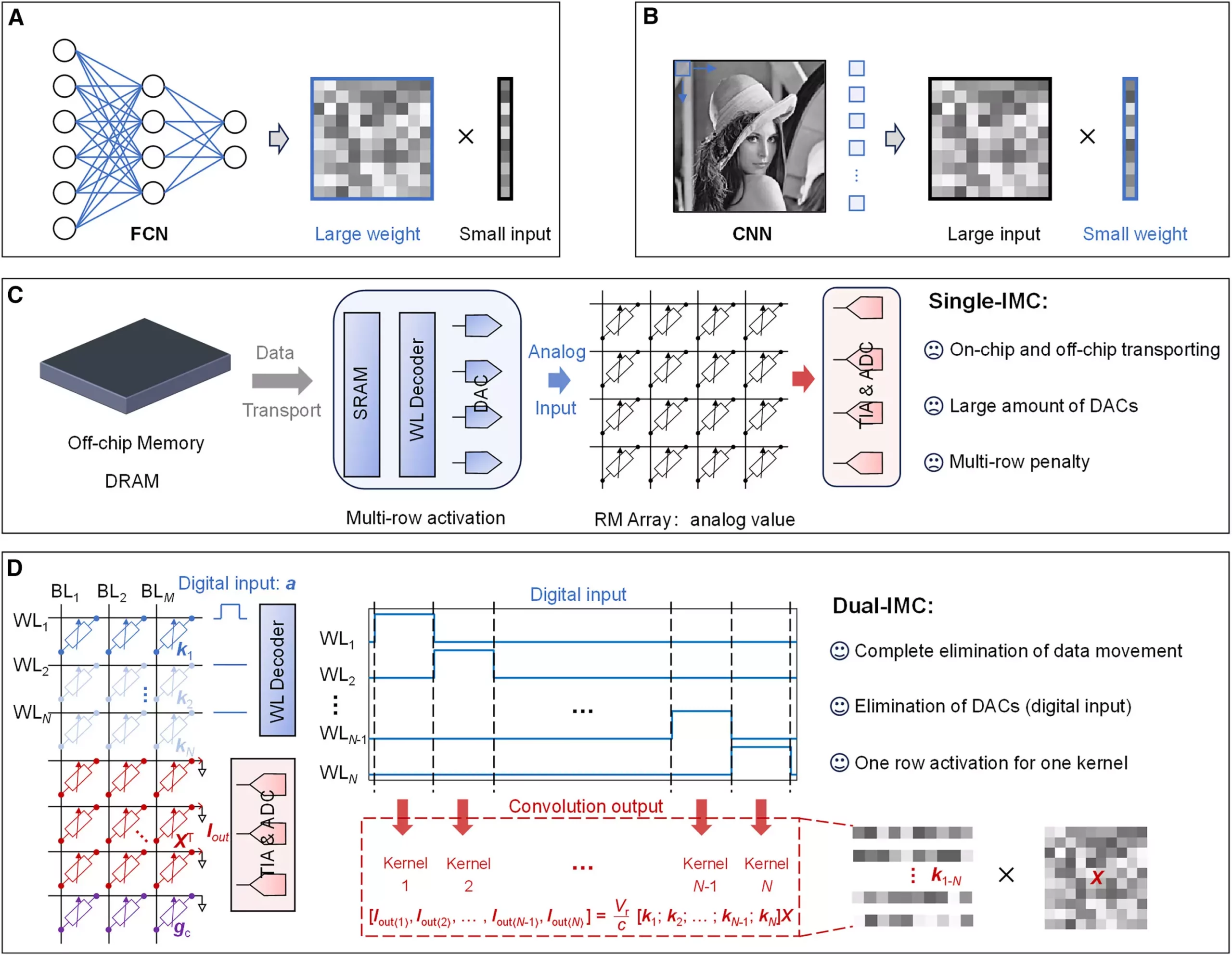

In a study published on September 12, 2024, in the journal Device, Professor Sun and his team unveiled a dual-in-memory computing (dual-IMC) scheme designed to overcome the limitations of traditional single-in-memory computing (single-IMC). In conventional single-IMC solutions, the neural network's weights are stored in the memory chip, but the inputs are supplied separately from outside it, which requires repeated, costly data transfers between on-chip and off-chip memory.
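For context, conventional single-IMC hardware is often modeled as an analog crossbar: each weight is stored as a device conductance, digital inputs are turned into row voltages by DACs, and each column wire sums the resulting cell currents. The sketch below is an idealized model under those textbook assumptions (no device noise, wire resistance, or output ADC). The helper names `dac` and `crossbar_mvm` and the parameters `bits` and `v_max` are hypothetical and not taken from the study.

```python
from typing import List


def dac(code: int, bits: int, v_max: float) -> float:
    """Hypothetical ideal DAC: map an unsigned digital code to a row voltage."""
    if not 0 <= code < 2 ** bits:
        raise ValueError("code out of range for this DAC resolution")
    return v_max * code / (2 ** bits - 1)


def crossbar_mvm(G: List[List[float]], volts: List[float]) -> List[float]:
    """Ideal crossbar read: G[i][j] is the conductance (siemens) at row i,
    column j. Each cell passes current V * G (Ohm's law) and each column
    wire sums its cells' currents (Kirchhoff's current law)."""
    if len(G) != len(volts):
        raise ValueError("need one voltage per crossbar row")
    n_cols = len(G[0])
    return [sum(volts[i] * G[i][j] for i in range(len(G))) for j in range(n_cols)]


if __name__ == "__main__":
    # Single-IMC: weights live in the array, but inputs arrive as digital
    # codes and must pass through a DAC first.
    G = [[1e-6, 2e-6], [3e-6, 4e-6]]  # illustrative conductances
    volts = [dac(c, bits=2, v_max=0.3) for c in [3, 1]]
    print(crossbar_mvm(G, volts))  # column currents in amperes
```

In this model every input has to pass through the DAC before the array can compute, which is the conversion step the dual-IMC scheme is reported to remove.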

The dual-IMC scheme changes this by storing both the weights and the inputs of a neural network in the same memory array. Data operations can then take place entirely in memory, substantially reducing the energy and time spent on the data movement that burdens conventional computing systems. Because the inputs no longer have to be converted from digital values into analog signals before being applied to the array, the scheme also removes the need for digital-to-analog converters (DACs), reducing circuit complexity and power consumption.
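The article does not detail the dual-IMC circuit, and the following is not the authors' design. It is a generic, hypothetical sketch of why inputs held as binary memory states can avoid multi-level DACs: each input is split into bit planes, every row whose stored bit is 1 is driven with the same fixed read voltage, and the per-plane column currents are combined digitally by shift-and-add. The names `bit_planes`, `dac_free_mvm`, and `v_read` are illustrative assumptions.

```python
from typing import List


def bit_planes(x: List[int], bits: int) -> List[List[int]]:
    """Split unsigned integers into bit planes, least significant first.
    Each plane is a 0/1 vector, i.e. something on/off memory states can hold."""
    if any(v < 0 or v >= 2 ** bits for v in x):
        raise ValueError("inputs must be unsigned and fit in `bits` bits")
    return [[(v >> b) & 1 for v in x] for b in range(bits)]


def dac_free_mvm(G: List[List[float]], x: List[int], bits: int,
                 v_read: float) -> List[float]:
    """Hypothetical DAC-free MVM (not the published dual-IMC circuit).
    Rows whose stored input bit is 1 all receive the same fixed read
    voltage, so no multi-level DAC is needed; per-plane column currents
    are then combined by shift-and-add."""
    if len(G) != len(x):
        raise ValueError("need one input per crossbar row")
    n_rows, n_cols = len(G), len(G[0])
    out = [0.0] * n_cols
    for b, plane in enumerate(bit_planes(x, bits)):
        for j in range(n_cols):
            # Kirchhoff sum over the rows that are switched on in this plane
            col = sum(v_read * G[i][j] for i in range(n_rows) if plane[i])
            out[j] += col * 2 ** b  # weight the plane by its binary significance
    return out


if __name__ == "__main__":
    print(dac_free_mvm([[1, 2], [3, 4]], [3, 1], bits=2, v_read=1.0))
    # [6.0, 10.0], the same as the direct product x^T G
```

For inputs `[3, 1]` and conductances `[[1, 2], [3, 4]]` in arbitrary units, the result matches the direct product. The trade-off in this particular sketch is one array read per input bit instead of a single analog read.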

Because it makes computation more efficient, the dual-IMC scheme goes to the heart of the von Neumann bottleneck. The researchers tested it with resistive random-access memory (RRAM) devices on tasks such as signal recovery and image processing, and reported significant advantages.

One notable benefit is improved energy efficiency in matrix-vector multiplication. Because the computation is carried out fully in memory, the delays and energy costs of accessing off-chip dynamic random-access memory (DRAM) and on-chip static random-access memory (SRAM) are avoided. With this data movement removed, processing is no longer held back by memory transfers, helping hardware keep pace with today's data-intensive workloads.

Moreover, the dual-IMC scheme's potential to lower production costs makes it a promising direction for future AI computing. Dispensing with DACs saves chip area and also reduces computing latency and overall power consumption.

Efficient data processing is becoming ever more important in an increasingly digital world. As Professor Sun and his team point out, the research behind the dual-IMC approach could open new directions in computing architecture and artificial intelligence. Its potential lies in changing how neural networks are executed in hardware, leading to faster, more efficient AI systems that are better able to handle large-scale datasets.

The research led by Professor Sun is an important step toward overcoming the limitations imposed by the von Neumann bottleneck. As industries continue to grapple with growing data-processing demands, innovations such as the dual-IMC scheme are likely to play a key role in building faster, more capable AI systems. The advance also underscores the value of continued research into new computing paradigms as we prepare for an information-rich future.
