The advent of artificial intelligence (AI) has sparked a global debate about its potential risks and rewards, especially in areas that directly affect personal safety and privacy. On August 1, 2024, the European Union's AI Act entered into force, establishing a regulatory framework for the development and deployment of AI technologies. The legislation aims both to protect users and to hold developers accountable for the systems they build, particularly those classified as high-risk. A recent study by researchers from Saarland University and Dresden University of Technology (TU Dresden) examines how this framework affects the day-to-day work of software developers.

The European AI Act marks a significant shift in how AI technologies are viewed and governed in the digital marketplace. Holger Hermanns, Professor of Computer Science at Saarland University, notes that the Act acknowledges a dual reality: AI can greatly improve efficiency, but it can also introduce dangers, especially when used in sensitive sectors such as healthcare and employment. This understanding calls for a balanced approach, one that harnesses AI's potential while limiting harm.

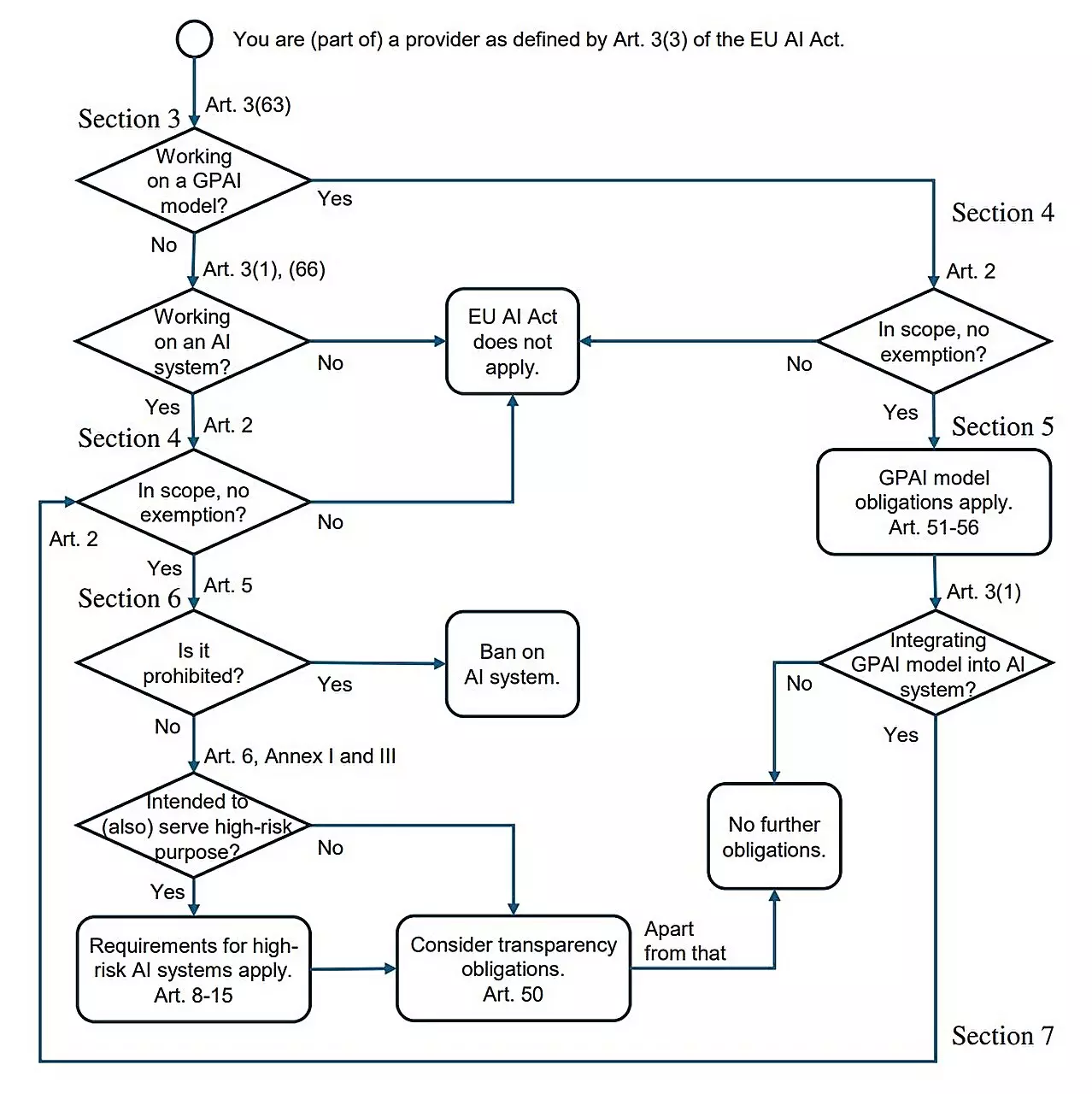

For developers, the central question is a practical one: "What do I really need to know?" At 144 pages of dense legal text, the AI Act can be daunting for programmers who lack the time or legal expertise to work through it in full. The study, titled "AI Act for the Working Programmer," addresses this by summarizing the Act's main provisions and outlining a path to compliance without overwhelming developers.

A key finding of the study is that most software developers will notice little change in their daily work. The Act's provisions become significant mainly for high-risk AI systems, such as those used in hiring or in medicine. For example, if a developer builds an AI tool that screens job applicants, the regulatory requirements apply as soon as the software is deployed. Compliance then entails strict standards for data sources, transparency, and the system's overall functionality.

By contrast, low-risk AI applications, such as those used in video games or simple spam filters, are largely exempt from the Act's stringent requirements. This distinction reflects the legislation's risk-based approach: it regulates where oversight is most needed without stifling innovation in less consequential domains.

Developers building high-risk AI systems need a clear understanding of the new requirements. Among other things, they must prioritize fairness by ensuring that their training data are representative of the people the system will affect. Discrimination arising from biased training data can have serious consequences, both for the people affected and for developers, who may face legal scrutiny.

The AI Act also requires thorough documentation of how an AI system works, comparable to a detailed user manual. This documentation supports ongoing monitoring and evaluation, making it possible to track performance and correct problems that arise after deployment. Hermanns also highlights the requirement for logging: much like an aircraft's flight recorder, logs make it possible to establish accountability and reconstruct what happened in the event of a malfunction or failure.

Despite the constraints it introduces, Hermanns and his co-authors view the regulation largely positively. The legislation aims not only to protect consumers but also to create a stable legal environment in which innovation can flourish. Importantly, the Act does not restrict research and development, whether in academia or industry, leaving room for continued exploration of AI's capabilities in Europe.

The European Union's AI Act establishes an extensive set of rules for developing and deploying AI technologies, but its impact differs sharply between high-risk and low-risk applications. As developers adapt to the new regulatory landscape, the onus will be on them to balance compliance with creativity and to ensure that their work benefits society responsibly. Legislation of this scope is a promising step toward a more ethical framework for AI, one in which technology and human values can coexist.
