In artificial intelligence, and with large language models (LLMs) in particular, accurate responses are paramount. As researchers refine these models, a recurring challenge is their weakness on highly specialized queries. The situation resembles knowing only part of an answer and wanting a fuller one: the usual solution is to consult an expert, something people do routinely. Training an LLM to recognize when to “phone a friend” and collaborate with a more specialized model has proven to be a nuanced task, however. Traditionally, such collaboration depended on intricate formulas or extensive labeled datasets, both of which are time-consuming and resource-intensive to produce.

Against this backdrop, researchers from the Massachusetts Institute of Technology’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed Co-LLM, an algorithm that lets models collaborate in a more organic way. The approach marks a shift in how LLMs can work together, with the aim of improving both their accuracy and their efficiency.

Understanding the Co-LLM Mechanism

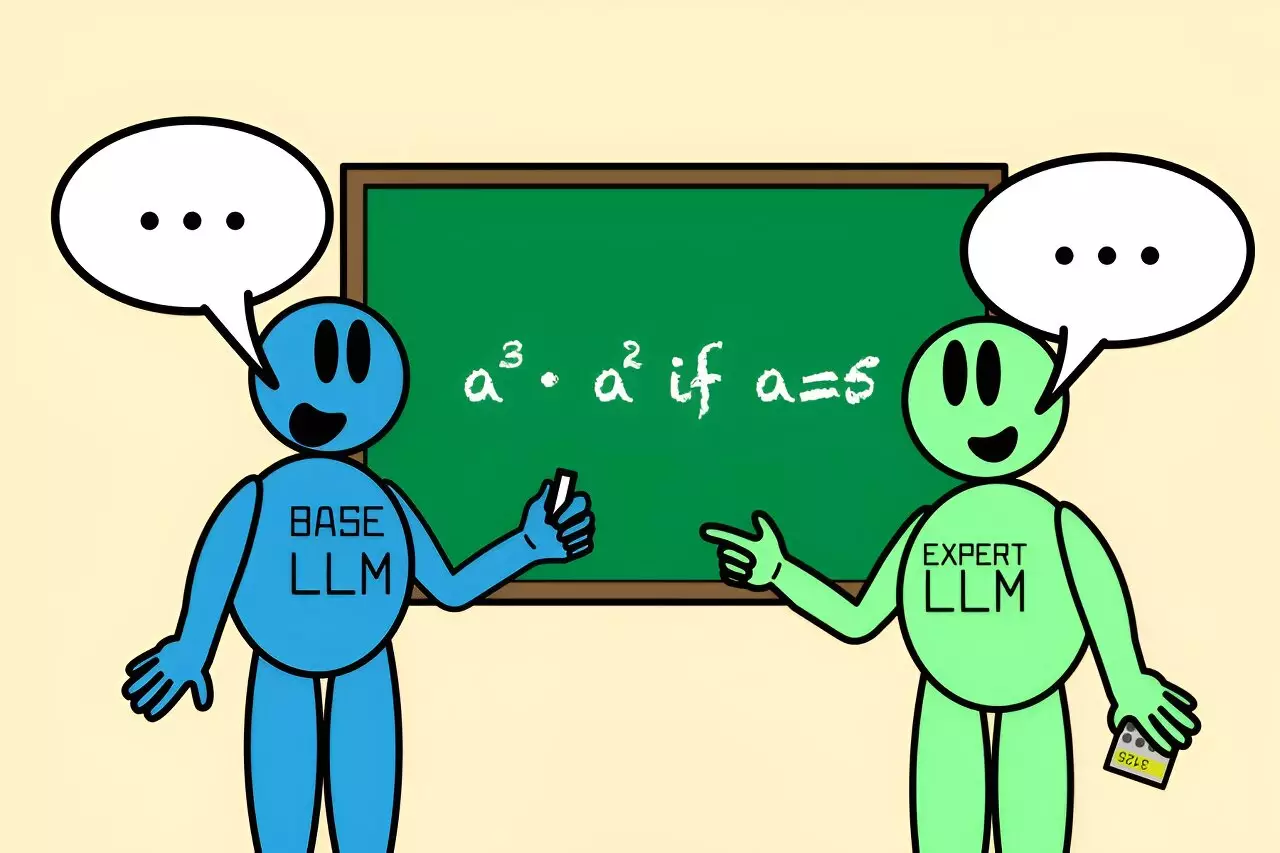

Co-LLM pairs a general-purpose base LLM with a specialized model to form a cooperative framework. The base model begins generating the response, while Co-LLM examines each token (roughly, each word or word piece) as it is produced. The algorithm relies on a “switch variable,” which acts like a project manager: it decides which tokens the base model can handle on its own and where the expert model needs to step in.

This process improves accuracy, which is particularly valuable for specialized questions such as medical queries or complex reasoning tasks. For instance, if the model is asked to describe extinct bear species, the base LLM can write most of the response, while the switch variable flags specific spots, such as the year of extinction, where a more knowledgeable model can supply precise information. The result is a richer, better-informed answer produced more efficiently, because the specialized model is engaged only when necessary.
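The token-level handoff described above can be pictured with a small sketch. It is only an illustration under stated assumptions, not the researchers' implementation: the function `co_generate`, the toy models, the `defer_prob` switch, and the 0.5 threshold are all hypothetical names and values chosen for the example, and the `<guess>` and `<year>` strings are placeholders rather than real model output.

```python
from typing import Callable, List, Tuple

# Hypothetical interfaces (assumptions for this sketch):
# a "model" maps the tokens generated so far to the next token,
# and the "switch" maps them to a probability of deferring to the expert.
NextToken = Callable[[List[str]], str]
DeferProb = Callable[[List[str]], float]


def co_generate(
    prompt: List[str],
    base_next: NextToken,
    expert_next: NextToken,
    defer_prob: DeferProb,
    threshold: float = 0.5,   # assumed cutoff, not taken from the article
    max_tokens: int = 32,
    eos: str = "<eos>",
) -> List[Tuple[str, str]]:
    """Generate token by token, recording which model produced each token."""
    tokens = list(prompt)
    trace: List[Tuple[str, str]] = []
    for _ in range(max_tokens):
        # The switch decides, per token, whether the expert should step in.
        use_expert = defer_prob(tokens) > threshold
        token = expert_next(tokens) if use_expert else base_next(tokens)
        if token == eos:
            break
        tokens.append(token)
        trace.append((token, "expert" if use_expert else "base"))
    return trace


# Toy stand-ins: scripted "models" that differ only at the factual slot.
BASE = ["The", "species", "went", "extinct", "in", "<guess>", "<eos>"]
EXPERT = ["The", "species", "went", "extinct", "in", "<year>", "<eos>"]


def toy_base(tokens: List[str]) -> str:
    return BASE[len(tokens)]


def toy_expert(tokens: List[str]) -> str:
    return EXPERT[len(tokens)]


def toy_defer(tokens: List[str]) -> float:
    # Pretend the switch has learned that a date usually follows "in".
    return 0.9 if tokens and tokens[-1] == "in" else 0.1


if __name__ == "__main__":
    for token, source in co_generate([], toy_base, toy_expert, toy_defer):
        print(f"{token:10s} {source}")
```

In this toy run the expert is called for a single token, the placeholder `<year>`, and the base model produces everything else, which mirrors the efficiency argument: the expensive model is consulted only where the switch asks for it.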

Training for Collaboration: A New Paradigm

The methodology behind Co-LLM goes beyond having two models interact: the general-purpose LLM is trained on domain-specific data that reflects the expert model’s area of expertise. Because this data exposes where the base model is weak, the base model can learn when to call on the expert LLM, which improves the overall accuracy of its responses.

For example, consider a query about the ingredients of a particular prescription medication. Here, the general model may offer an inaccurate answer; however, the collaboration with a specialized biomedical model ensures that users receive accurate and trustworthy information, showcasing Co-LLM’s potential impact across various fields, especially in contexts that demand precision.
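One way to picture how domain data can expose the base model's weak spots is to compare, token by token, how well each model predicts a reference answer. The sketch below is a heuristic chosen for illustration and is not presented as the researchers' training procedure; the function `deferral_labels`, the `margin` parameter, and the probabilities in the example are all assumptions.

```python
import math
from typing import List


def deferral_labels(
    base_logprobs: List[float],
    expert_logprobs: List[float],
    margin: float = 0.0,
) -> List[int]:
    """Mark reference tokens where the expert clearly beats the base model.

    Each list holds the log-probability a model assigns to the reference
    token at that position. A label of 1 means "defer to the expert here".
    """
    if len(base_logprobs) != len(expert_logprobs):
        raise ValueError("both models must score the same reference tokens")
    return [
        1 if expert - base > margin else 0
        for base, expert in zip(base_logprobs, expert_logprobs)
    ]


if __name__ == "__main__":
    # Made-up probabilities for a four-token reference answer whose last
    # token is a specialized fact (for example, a drug ingredient).
    base_probs = [0.90, 0.80, 0.70, 0.05]
    expert_probs = [0.85, 0.80, 0.75, 0.90]

    labels = deferral_labels(
        [math.log(p) for p in base_probs],
        [math.log(p) for p in expert_probs],
        margin=math.log(2),  # assumed: expert must be at least 2x as likely
    )
    print(labels)
```

With these invented numbers only the final, fact-bearing token is marked for deferral. Labels of this kind could serve as a training signal for a switch, though how the switch is actually trained is not specified here.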

Co-LLM’s strengths are not limited to factual accuracy. The researchers also demonstrated the algorithm’s robustness on mathematical problems. In one instance, the general-purpose model working alone computed a math problem incorrectly. When Co-LLM let it collaborate with a specialized mathematical model, the pair caught the errors and arrived at a more accurate solution. This shows how Co-LLM can go beyond what either model achieves alone, increasing the reliability of responses in areas where accuracy is critical.

Moreover, collaboration under Co-LLM is not merely about handing off tasks; it is structured to accommodate the idiosyncrasies of each model. Unlike previous strategies, which required all models to have similar training backgrounds, Co-LLM can pair models that were trained differently, which makes it a more flexible way to improve performance and accuracy.

The research team is already contemplating further enhancements to the Co-LLM framework. Future iterations may include a more sophisticated deferral mechanism allowing the system to backtrack when the expert model provides inaccurate responses. This concept reflects an aspiration to model human-like adaptability, further improving the quality and dependability of outputs.

Projected applications include keeping answers current as new information becomes available and assisting with enterprise documents, so that responses are not only correct but also reflect the most recent knowledge. Co-LLM could also enable smaller private models to work efficiently with larger LLMs, which has implications for the many industries where information security and accuracy are paramount.

Co-LLM represents a forward-thinking approach to LLM collaboration. By mimicking the way people work together, the algorithm has the potential to change how language models generate accurate, useful information across domains, addressing gaps that have long challenged researchers and practitioners alike.
