Artificial intelligence (AI) has advanced rapidly in recent years, with deep neural networks (DNNs) at the forefront of this progress. However, as researchers at the University of Notre Dame highlight in a recent study published in Nature Electronics, the fairness of AI systems can be compromised both by biases in the data they are trained on and by the hardware platforms they are deployed on. This article examines the study's findings, with an emphasis on the role hardware plays in keeping AI technologies fair.

Shi and his team set out to address a gap in current understanding: how hardware design influences the fairness of AI systems. The study focused on emerging hardware designs, such as computing-in-memory (CiM) devices, and their impact on the fairness of DNNs. Through a series of experiments, the researchers examined the relationship between hardware and fairness in AI deployments.

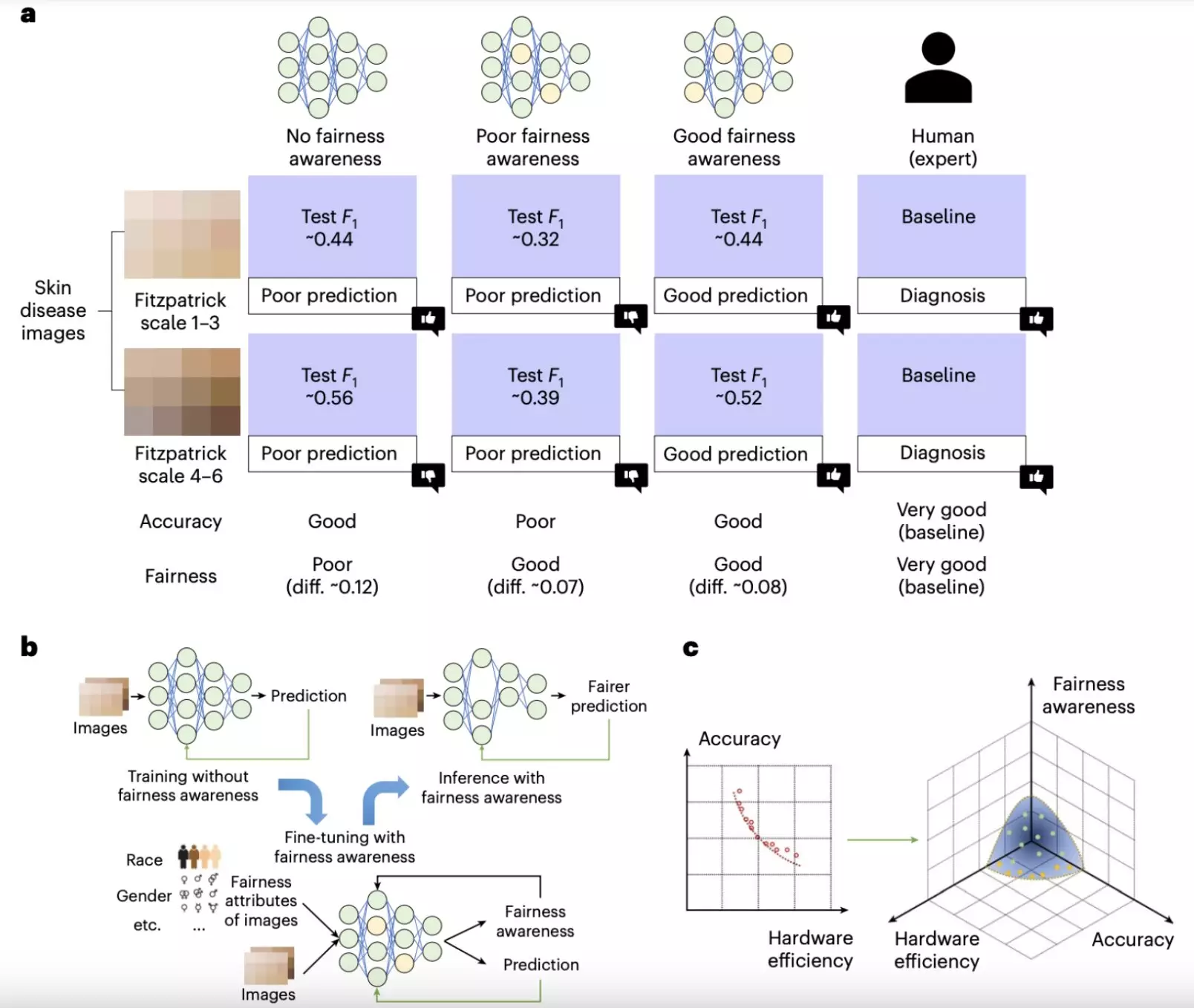

One of the study's key findings concerns hardware-aware neural architecture design. The researchers found that larger, more complex neural networks tended to be fairer, but at the cost of requiring more capable hardware. So while these models performed better, they are harder to deploy on resource-constrained devices.
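The article does not spell out how fairness was scored. One common group-fairness measure, assumed here purely for illustration and not confirmed as the study's metric, compares a model's accuracy across demographic groups: the smaller the gap between the best- and worst-served groups, the fairer the model. A minimal sketch, where `group_accuracies` and `accuracy_gap` are hypothetical helper names and the toy data is made up:

```python
from collections import defaultdict


def group_accuracies(y_true, y_pred, groups):
    """Return the accuracy of the predictions for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def accuracy_gap(y_true, y_pred, groups):
    """Best-group accuracy minus worst-group accuracy (0.0 means perfectly even)."""
    accs = group_accuracies(y_true, y_pred, groups)
    return max(accs.values()) - min(accs.values())


if __name__ == "__main__":
    # Toy labels, predictions, and group memberships (made-up data).
    y_true = [1, 0, 1, 1, 0, 1]
    y_pred = [1, 0, 1, 0, 1, 1]
    groups = ["A", "A", "A", "B", "B", "B"]
    print(group_accuracies(y_true, y_pred, groups))  # -> {'A': 1.0, 'B': 0.3333333333333333}
    print(accuracy_gap(y_true, y_pred, groups))  # -> 0.6666666666666667
```

Under this measure, "fairer" means a smaller gap; a model can raise its overall accuracy while still leaving one group far behind.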

Given these deployment challenges, the team proposed strategies for increasing fairness without significantly increasing computational demands. One is to compress larger models, balancing performance against computational load. The researchers also emphasized noise-aware training as a way to make AI models more robust and fair.
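The article names noise-aware training without describing it. A widely used form, assumed here rather than taken from the study, injects random perturbations into the weights on every training forward pass, so the learned parameters keep working when the hardware distorts them. The sketch applies this idea to a tiny logistic-regression model in pure Python; the multiplicative Gaussian noise model, the relative noise level `sigma`, and all function names are hypothetical choices for illustration.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def perturb(weights, sigma, rng):
    """Hypothetical device model: scale each weight by (1 + N(0, sigma)).

    Returns the noisy weights and the per-weight scale factors.
    """
    factors = [1.0 + rng.gauss(0.0, sigma) for _ in weights]
    return [w * f for w, f in zip(weights, factors)], factors


def train_noise_aware(data, epochs=200, lr=0.1, sigma=0.1, seed=0):
    """Train logistic regression with fresh weight noise on every forward pass.

    data: list of (features, label) pairs with label in {0, 1}.
    The gradient flows through the noisy weights but updates the clean copy.
    """
    rng = random.Random(seed)
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            w_noisy, factors = perturb(w, sigma, rng)
            z = sum(wi * xi for wi, xi in zip(w_noisy, x)) + b
            err = sigmoid(z) - y  # d(cross-entropy)/dz
            # Chain rule: dz/dw_i = x_i * factor_i, since w_noisy_i = w_i * factor_i.
            for i, (xi, fi) in enumerate(zip(x, factors)):
                w[i] -= lr * err * xi * fi
            b -= lr * err
    return w, b


def accuracy(w, b, data, sigma=0.0, seed=1):
    """Accuracy when inference runs on weights perturbed with noise level sigma."""
    rng = random.Random(seed)
    correct = 0
    for x, y in data:
        w_dev, _ = perturb(w, sigma, rng)
        z = sum(wi * xi for wi, xi in zip(w_dev, x)) + b
        correct += int((z > 0) == (y == 1))
    return correct / len(data)


if __name__ == "__main__":
    # Toy 1-D data set: label is 1 when the feature is positive.
    data = [([v], int(v > 0)) for v in (-2.0, -1.5, -1.0, -0.5, 0.5, 1.0, 1.5, 2.0)]
    w, b = train_noise_aware(data)
    print("clean accuracy:", accuracy(w, b, data))
    print("noisy accuracy:", accuracy(w, b, data, sigma=0.2))
```

The key design choice is that the gradient is taken through the perturbed weights (the `fi` factor), so training sees the same kind of distortion the deployed model would; simply adding noise at test time would not change what the model learns.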

The study also examined how hardware-induced non-idealities, such as device variability and stuck-at faults, affect the fairness of AI models. The experiments revealed trade-offs between accuracy and fairness across different hardware setups, and the researchers recommended noise-aware training to mitigate these effects and keep deployments fair.
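To make the two non-idealities concrete, the sketch below perturbs a model's weights the way a CiM array might, then reports accuracy per demographic group. The fault model is a deliberately simple, hypothetical one, not the study's: variability scales each weight by (1 + N(0, sigma)), and a stuck-at fault pins a weight either to zero (stuck-off) or to the largest programmable magnitude `w_max` (stuck-on, keeping the weight's sign for simplicity). The fault probability `p_stuck`, the linear classifier, the toy samples, and all helper names are assumptions for illustration.

```python
import random
from collections import defaultdict


def apply_nonidealities(weights, sigma=0.05, p_stuck=0.01, w_max=1.0, seed=0):
    """Return a perturbed copy of `weights` under a hypothetical CiM fault model."""
    rng = random.Random(seed)
    out = []
    for w in weights:
        r = rng.random()
        if r < p_stuck / 2:
            out.append(0.0)  # stuck-off: device stuck at its lowest state
        elif r < p_stuck:
            out.append(w_max if w >= 0 else -w_max)  # stuck-on: largest magnitude
        else:
            out.append(w * (1.0 + rng.gauss(0.0, sigma)))  # device variability
    return out


def per_group_accuracy(weights, bias, samples):
    """Accuracy of the linear rule `w.x + bias > 0` for each group in `samples`.

    samples: list of (features, label, group) with label in {0, 1}.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for x, y, group in samples:
        pred = int(sum(w * xi for w, xi in zip(weights, x)) + bias > 0)
        total[group] += 1
        correct[group] += int(pred == y)
    return {g: correct[g] / total[g] for g in total}


if __name__ == "__main__":
    weights, bias = [0.8, -0.5, 0.3], 0.1  # stand-in for a trained model
    samples = [
        ([1.0, 0.2, 0.5], 1, "group_a"),
        ([0.1, 0.9, 0.2], 0, "group_a"),
        ([0.2, 0.8, 0.1], 0, "group_b"),
        ([0.6, 0.7, 0.9], 1, "group_b"),
    ]
    print("ideal:", per_group_accuracy(weights, bias, samples))  # -> {'group_a': 1.0, 'group_b': 1.0}
    # Each seed plays the role of a different physical chip with its own faults.
    for seed in range(3):
        noisy = apply_nonidealities(weights, sigma=0.2, p_stuck=0.2, seed=seed)
        print(f"chip {seed}:", per_group_accuracy(noisy, bias, samples))
```

Because every chip draws its own faults, the same trained model can serve one group well on one device and poorly on another, which is one way an accuracy-fairness trade-off can surface across hardware setups.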

Looking ahead, Shi and his colleagues plan to investigate the intersection of hardware design and AI fairness further. They aim to develop frameworks that optimize neural network architectures for fairness while respecting hardware constraints, and to explore adaptive training techniques that account for the variability and limitations of different hardware systems. The goal is AI systems that are both accurate and equitable.

The study underscores the importance of considering hardware design alongside software algorithms when developing fair AI. By attending to both, developers can build AI systems that perform equally well across diverse user demographics. The findings argue for a holistic approach to fairness in artificial intelligence.
