Researchers at the Alberta Machine Intelligence Institute (Amii) have documented a fundamental problem in machine learning. Their paper, “Loss of Plasticity in Deep Continual Learning,” published in Nature, describes a phenomenon that stands in the way of artificial intelligence systems that can keep learning and function effectively in real-world scenarios.

The Discovery of Loss of Plasticity

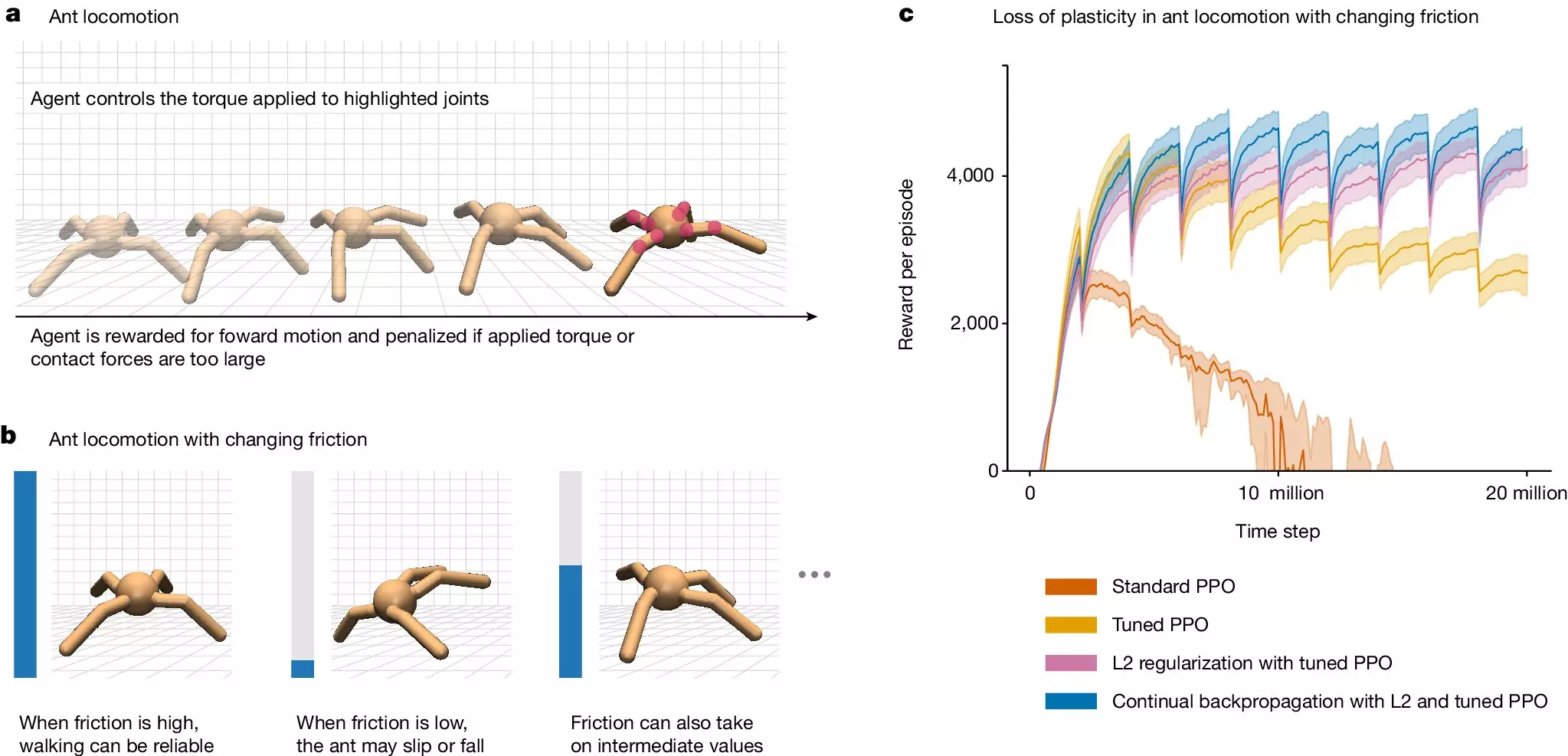

The researchers examine a problem that has long affected deep learning models but has received little attention. Called “loss of plasticity,” it is a gradual decline in the ability of deep learning agents to keep learning when they are trained continually. Co-author A. Rupam Mahmood emphasizes the implications: not only do AI agents lose the capacity to acquire new knowledge, they also struggle to relearn information after it has been forgotten.

The researchers draw parallels between the loss of plasticity in deep learning models and the concept of neural plasticity in neuroscience, where the brain’s ability to adapt and forge new neural connections is essential for learning and memory retention. They underscore that addressing loss of plasticity is paramount for the advancement of AI systems capable of navigating the complexities of the real world and achieving human-level intelligence.

Challenges in Continual Learning

Existing deep learning models are ill-equipped for continual learning. ChatGPT, for example, is trained for a fixed period and does not keep adapting afterward. Integrating new information with existing knowledge in such models is difficult and often requires retraining from scratch, a time-consuming and costly process, particularly for large-scale models like ChatGPT.

The team’s investigation was motivated by scattered hints in the existing literature that the problem might be widespread in deep learning. Through a series of experiments in supervised learning settings, the researchers demonstrated that loss of plasticity is pervasive, underscoring the need to address it.

Towards a Solution

To mitigate loss of plasticity, the researchers devised an approach called “continual backpropagation.” It modifies standard backpropagation by periodically reinitializing underperforming network units, so that part of the network stays as adaptable as it was at the start of training. The method has shown promising results in enabling deep learning models to sustain continual learning over extended periods.
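The idea of reinitializing underperforming units can be illustrated with a minimal NumPy sketch for a network with one hidden layer. This is not the paper's implementation. The function names, the utility measure (a running average of each unit's activation magnitude times its outgoing weight magnitude), the maturity threshold, and all default values are assumptions made for illustration.

```python
import numpy as np


def update_utility(utility, age, hidden, w_out, decay=0.99):
    """Update a running utility estimate for each hidden unit, in place.

    Assumed utility measure: mean |activation| over the batch times the
    summed |outgoing weight| of the unit.
    hidden: (batch, n_hidden), w_out: (n_hidden, n_outputs)
    """
    contribution = np.abs(hidden).mean(axis=0) * np.abs(w_out).sum(axis=1)
    utility *= decay
    utility += (1.0 - decay) * contribution
    age += 1


def reinit_low_utility_units(w_in, b, w_out, utility, age, *,
                             replacement_rate=0.01,
                             maturity_threshold=100, rng=None):
    """Reinitialize the lowest-utility mature hidden units, in place.

    w_in: (n_inputs, n_hidden), b: (n_hidden,), w_out: (n_hidden, n_outputs)
    Returns the indices of the units that were reset.
    """
    rng = np.random.default_rng() if rng is None else rng

    # Only units older than the threshold are eligible, so freshly
    # reset units get time to learn before being judged again.
    mature = np.flatnonzero(age > maturity_threshold)
    n_replace = int(replacement_rate * mature.size)
    if n_replace == 0:
        return np.array([], dtype=int)

    # Pick the mature units with the smallest utility.
    order = np.argsort(utility[mature])
    chosen = mature[order[:n_replace]]

    # Fresh random incoming weights (simple uniform init, an assumption).
    n_in = w_in.shape[0]
    bound = 1.0 / np.sqrt(n_in)
    w_in[:, chosen] = rng.uniform(-bound, bound, size=(n_in, chosen.size))
    b[chosen] = 0.0

    # Zero outgoing weights so the reset does not disturb the current output.
    w_out[chosen, :] = 0.0
    utility[chosen] = 0.0
    age[chosen] = 0
    return chosen
```

In a training loop, `update_utility` would be called after each forward pass and `reinit_low_utility_units` after each ordinary gradient step. Setting the outgoing weights of reset units to zero is a design choice in this sketch: the network's predictions are unchanged at the moment of the reset, and the new units only start to matter once gradient descent finds a use for them.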

Future Prospects

The team’s findings mark a significant step toward understanding, and potentially overcoming, loss of plasticity in deep learning models, and co-author Richard S. Sutton is optimistic about further innovations in this domain. By drawing attention to the inherent challenges of deep learning systems, the research is poised to catalyze broader efforts to address fundamental issues and move the field towards more robust and adaptive AI solutions.

The research conducted by the Amii team sheds light on a critical obstacle impeding the progress of deep learning models. By unveiling the pervasive nature of loss of plasticity and proposing innovative solutions to address this challenge, the researchers have not only advanced our understanding of AI limitations but also paved the way for more resilient and adaptable artificial intelligence systems in the future.
